Build Data Factories, Not Data Warehouses

May 18, 2022

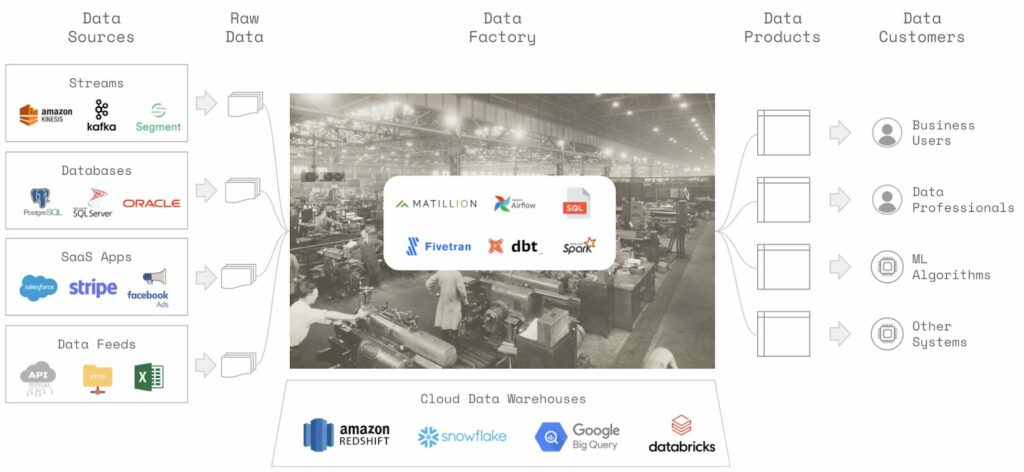

We aren’t loading indistinguishable pallets of data into virtual warehouses, where we stack them in neat rows and columns and then forklift them out onto delivery trucks.

Instead, we feed raw data into factories filled with complex assembly lines connected by conveyor belts. Our factories manufacture customized and evolving data products for various internal and external customers.

As a business operating a data factory, our primary concerns should be:

- Is the factory producing high-quality data products?

- How much does it cost to run our factory?

- How quickly can we adapt our factory to changing customer needs?

Cloud data warehouses like Amazon Web Services’ Redshift, Snowflake, Google’s BigQuery, and Databricks have reduced data factory operating costs. Orchestration tools like Airflow and data transformation frameworks like dbt have made redesigning components in our factory easier.

But we often miss data quality issues, resulting in bad decisions and broken product experiences. Or they are caught by end users at the last minute leading to fire drills and eroded trust.

Data Quality Control Priorities

To establish data quality control in our metaphorical factory, we could test at four points:

- The raw materials that arrive in our factory.

- The machine performance at each step in the line.

- The work-in-progress material that lands between transformation steps.

- The final products we ship to internal or external customers.

These testing points are not equally important. As a factory operator, the most critical quality test is at the end of the line. Factories have dedicated teams that sample finished products and ensure they meet rigorous quality standards.

The same holds for data. We don’t know if the data we produce is high quality until we have tested the finished product. For example:

- Did a join introduce duplicate rows?

- Did a malformed column cause missing values?

- Are timestamps inconsistently recorded?

- Has a change in query logic affected business metrics?

After validating the quality of our final product, we should ensure we are consuming high-quality raw materials. Identifying defects in raw data arriving into the factory will save us time and effort in root-causing issues later.

Insufficient Investments

Unfortunately, to date, most investment in testing our data factories has been the equivalent of evaluating machine performance or visualizing floor plans:

- We monitor data infrastructure for uptime and responsiveness.

- We monitor Airflow tasks for exceptions and run times.

- We apply rule-based tests with dbt to check the logic of transformations.

- We analyze data lineage to build complex maps of data factory floors.

These activities are helpful, but we have put the cart before the horse! We should first ensure that our factory produces and ingests high-quality data. From conversations I have had with hundreds of data teams, I believe we have failed to do so for three reasons:

1. We use the tools we have at hand.

Engineering teams have robust tools and best practices for monitoring the operations of web and backend applications. We can use these existing tools to monitor the infrastructure and orchestration for our data factory. However, these tools are incapable of monitoring the data itself.

2. We have tasked machine operators with quality control.

The burden of data quality often falls on the backs of the data and analytics engineers operating the machines in the factory. They are experts in the tools and logic used to transform the data. They may write tests to ensure their transformations are correct, but they can overlook upstream or downstream issues from their processing.

3. Testing data well is difficult.

Our data factories produce thousands of incredibly diverse data tables with hundreds of meaningful columns and segments. The data in these tables constantly changes for reasons that range from “expected” to “entirely out of our control.” Simplistic testing strategies frequently miss real issues, and complex strategies are hard to maintain. Poorly calibrated tests can spam users with false-positive alerts, leading to alert fatigue.

Data Quality Control Needs

We need purpose-built tools to monitor and assess the quality of data arriving into or exiting our data factories.

We should place these tools into the hands of data consumers — the subject matter experts who deeply care about the quality of the data they use. These consumers should be able to quickly test their data and monitor their key metrics, with or without code.

Our data quality tools must scale to cover thousands of tables, with billions of rows, across hundreds of teams, in daily batch processes or real-time flows.

The algorithms used should be flexible enough to handle data from diverse applications and industries. They should gracefully adapt to different tabular structures, data granularity and table update mechanics. We should automate testing to avoid burdening data consumers with busy work.

We should avoid creating alert fatigue by minimizing false positives through notification controls, feedback loops and robust predictive models. When issues arise, we should visually explain them by leveraging context in the data and upstream data generation processes.

The Future of Data Quality

Organizations today can capture, store and query a remarkable breadth of data relevant to their business. They can democratize access to this data so that analyses, processes or products can depend on it.

Data teams operate complex data factories to service the data needs of their organization. But they are often unable to control the quality of data produced. Data teams risk losing trust and becoming sidelined if they do not catch and address data quality issues before downstream users.

Data leaders must take responsibility for data quality by defining and enforcing quality control standards. They need tools and processes that test data in ways that scale, both with the data itself and the people involved in producing and consuming it.

These are complex challenges, but a tremendous amount of innovation is happening in the data community to address them. I look forward to a future where our data factories are transparent, fast, inexpensive, and produce data of outstanding quality!

I’d like to thank Anthony Goldbloom, Chris Riccomini, Dan Siroker, D.J. Patil, John Joo, Kris Kendall, Monica Rogati, Pete Soderling, Taly Kanfi and Vicky Andonova for their feedback and suggestions.

Originally published on The New Stack.

Get Started

Meet with our expert team and learn how Anomalo can help you achieve high data quality with less effort.