Effective Dynamic Data Testing Strategies

November 18, 2020

Data is rarely static, so why should data tests be?

When testing data, our first instinct is to reach for perfection. Can’t we write down a clear set of rules that govern exactly how our data should behave, just like we do when testing software?

Of course we can’t! Data isn’t software, and shouldn’t be tested in the same way.

When testing your data, there are far more factors that are out of your control.

The reality is that the factors affecting your data that are out of your control will usually far outweigh those that are in your control.

As your organization grows your business decisions, processes, products and code can all change your data in unexpected ways. And your data is truly at the mercy of many external factors. From how users behave, to what events occur, to the combined actions of competitors, suppliers or market forces.

To test data effectively we need tests that adapt with these forces.

In this post, we outline a framework for data testing, from static tests that can be written in SQL, to dynamic tests that require statistics or machine learning. Then we compare both approaches with an example from COVID-19 data in the EU.

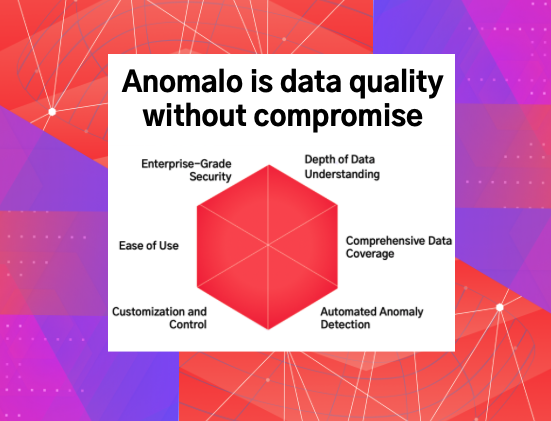

In practice, data can be tested with the following four broad approaches:

The relative importance of static and dynamic data testing strategies.

Fixed rules make a statement in absolute terms about a dataset, such as “this column is never NULL” or “this string always matches a pattern.” These tests are great when your data must be perfect in some clear and known way.

Specified ranges require a computed number to be within a pre-determined interval, such as “values should be zero for 1–3% of records” or “the mean of a column should be between 13 and 16”. These can be used when you know in advance that a key metric or data statistic should never drift outside of a range.

Predicted ranges are just like specified ranges, except the range is predicted by a time series model. The user can control how much uncertainty should be in the predicted interval, such as “the mean is within a 95% predicted confidence interval”. These are more powerful tests that can find any significant change in key metrics or summary statistics.

Unsupervised detection is the most sophisticated approach, where anomalous changes are found in an important dataset. All that is required is that the user specify what data is important. Such tests can identify unexpected changes that you hadn’t thought to test for. Stay tuned for future posts on unsupervised detection.

An example of each of the four types of data testing strategies on EU CDC COVID-19 data.

Dynamic testing strategies such as predicted ranges or unsupervised detection have some significant advantages. They are easier to set up and easier to maintain over time. They can also be used to test any data for any condition, regardless of the current quality of the data.

Of course, there are still very good reasons to use static tests. They are powerful when you know exactly how your data should behave, and want to be alerted even if the data varies only slightly from this expectation.

But relying only on static tests leads either to poor test coverage — where the majority of important data is not well tested, or to a high maintenance burden that will prevent a testing strategy from being sustainable.

Let’s consider an example. The European CDC provides COVID-19 data hosted in BigQuery here. In addition to statistics like cases and deaths broken out by country and date, this dataset also tracks intensive care patients.

But many of the intensive care records appear to be NULL. For example, in the BigQuery console we find that 98% are NULL:

Suppose we are back at July 1st, and we want to set manual bounds for the percent of NULL values in the cumulative_intensive_care_patients column.

We review the percentages by day, and decide on a bound of 97.5% — 98.5%:

Fast-forward to August 6th, and the NULL percentage has dropped below our initial guess.

We investigate and find that this is a natural trend due to expanding data collection. Worried about getting more false positive alerts, we widen our interval to 97% to 99%, and everything looks good for a few months:

But then a sudden spike occurs on November 8th that we miss entirely:

Instead, if we had used a predicted range test, this data quality issue would have been caught immediately:

Behind the scenes, this test uses a time series model which dynamically adjusts to the data. The model controls for changes in trend (blue) and seasonality (purple). It then produces a well calibrated predicted range (green). This allows us to clearly identify the anomaly (red):

Predicted range tests should:

- Control for changes in trend and seasonality, without over-reacting

- Adjust for holidays, which can cause sudden spikes or dips in metrics

- Identify and treat historical outliers, so they do not unduly influence future predictions

- Accurately predict an interval of possible outcomes based on historical variance in the series

Once these factors are accounted for, predicted range tests are a very powerful data testing strategy.

To effectively test their data, companies should use a portfolio of testing strategies. Static tests such as fixed rules or specified ranges should be used only when there are clearly known expectations about data that is already of high quality.

The majority of data tests should be dynamic to ensure high data test coverage that adapts as your data changes without requiring constant maintenance.

We are building a data testing product with a strong emphasis on dynamic testing over at Anomalo. So, if you’re interested in easily enabling dynamic tests for your data, head to our site to learn more or request a demo.

Categories

- Product Updates

Ready to Trust Your Data? Let’s Get Started

Meet with our team to see how Anomalo transforms data quality from a challenge into a competitive edge.